How Object Recognition Eliminates Bot Fragility & Delivers Change Resiliency

Innovate. Upgrade. Migrate. Refresh. Rapidly evolving technologies are both necessary and complex for any competitive business that wants to remain relevant and positioned for growth. And enterprise applications are at the center of the storm, leaving your mission-critical systems vulnerable.

The good news is, you can leverage automation to ensure your quality assurance, testing and quality engineering efforts remain battle-tested to weather that storm.

Below is an excerpt from Sogeti’s State of Artificial Intelligence Applied to Quality Engineering 2021-2022 report, taken from the chapter on “Self Healing Automation” by Worksoft Chief Product & Strategy Officer Shoeb Javed. The excerpt describes the patented object action framework used by Worksoft Certify, the automation engine that drives pre-production test automation and post-production RPA in our Connective Automation Platform. The framework lets a single update propagate across the entire set of automation, so one update to one object can fix thousands of steps in hundreds of different processes.

Modeling an Enterprise Application: The Object-Action Framework

Instead of brittle bots that leave change to chance and cannot adapt, Worksoft’s change-resilient automation automatically replicates a change anywhere the object is used, saving time and resources.

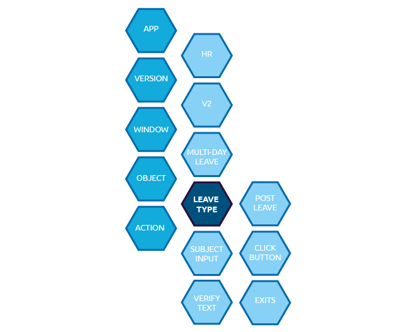

Bots interact with applications either via the application front end (browser, desktop app, mobile app, terminal emulator, etc.) or via APIs. The application can be broken down into components as shown below.

- App – Application name, e.g., Workday, Salesforce, etc.

- Version – Version of the application being used

- Window – Web page or application screen

- Object – An element on the screen, e.g., the ‘Post Leave’ button

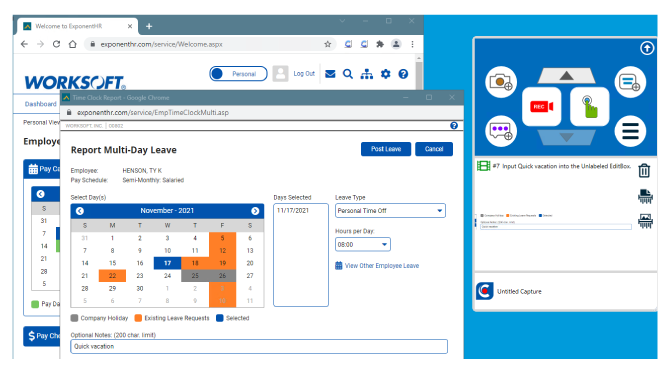

In the example in the graphic, we have an HR application that we want to automate. The automation steps then become:

Go to ‘Report Multi-Day Leave’ [Window] page

Select [Action] ‘Personal Time Off’ in the ‘Leave Type’ [Object] dropdown

Press [Action] the ‘Post Leave’ [Object] button

From the pattern above, the automation goes to an application window (the ‘Report Multi-Day Leave’ page), finds an object (the ‘Leave Type’ dropdown or the ‘Post Leave’ button) and performs an action (selecting ‘Personal Time Off’ from the ‘Leave Type’ dropdown or clicking the ‘Post Leave’ button).

Each of the objects has a type: ‘button’, ‘dropdown’, ‘table’, etc.

Depending on the type of object, an action can be performed: ‘click’, ‘select’, ‘verify’, etc.

All windows (screens) and elements of an application can be modeled in this way. Some objects are more complicated than others, and therefore have more complex actions, but the pattern of interaction is the same.
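The window, object, action pattern can be sketched in a few lines of Python. This is only an illustration of the idea, not Worksoft Certify's data model: the `Step` class, the `ACTIONS_BY_TYPE` table and the `is_valid` helper are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical table of which actions each object type supports.
ACTIONS_BY_TYPE = {
    "button": {"click", "verify"},
    "dropdown": {"select", "verify"},
    "table": {"verify"},
}


@dataclass(frozen=True)
class Step:
    window: str                  # e.g. the 'Report Multi-Day Leave' page
    object_name: str             # e.g. 'Leave Type'
    object_type: str             # e.g. 'dropdown'
    action: str                  # e.g. 'select'
    value: Optional[str] = None  # e.g. 'Personal Time Off'


def is_valid(step: Step) -> bool:
    """Return True if the step's action is allowed for its object type."""
    return step.action in ACTIONS_BY_TYPE.get(step.object_type, set())


# The two steps from the HR example above.
steps = [
    Step("Report Multi-Day Leave", "Leave Type", "dropdown", "select", "Personal Time Off"),
    Step("Report Multi-Day Leave", "Post Leave", "button", "click"),
]
print([is_valid(s) for s in steps])  # [True, True]
```

Because the allowed actions hang off the object type rather than the individual object, a more complicated object only needs a richer entry in the table; the shape of a step stays the same.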

The model is always separate from the automation. The automation steps reference the model that is stored separately. The beauty of this approach is that if an object changes, then only the model definition of the object needs to be updated. If that object is used by a thousand automation steps, then all of them get updated automatically.
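A minimal sketch of that separation, assuming a simple in-memory dictionary as the model store: the locator strings (`id=postLeaveBtn`, `id=submitLeave`) and the `ObjectDefinition` and `resolve` names are made up for illustration and are not taken from the product.

```python
from dataclasses import dataclass


@dataclass
class ObjectDefinition:
    object_type: str
    locator: str  # hypothetical locator string, e.g. an element ID


POST_LEAVE = ("Report Multi-Day Leave", "Post Leave")

# The model, stored separately from the automation steps.
model = {
    POST_LEAVE: ObjectDefinition("button", "id=postLeaveBtn"),
}

# A thousand steps that reference the object by key only, never by locator.
steps = [(POST_LEAVE, "click") for _ in range(1000)]


def resolve(steps, model):
    """Look up each step's locator in the model at execution time."""
    return [(model[key].locator, action) for key, action in steps]


# The application changes: one edit to the model definition...
model[POST_LEAVE].locator = "id=submitLeave"

# ...and every step that references the object picks up the change.
resolved = resolve(steps, model)
print(len(resolved), resolved[0])  # 1000 ('id=submitLeave', 'click')
```

The steps themselves are never edited; they hold a reference, and the reference is resolved against the current model each time the automation runs.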

The trick now is to find good definitions to consistently recognize application objects and update those definitions automatically when the application changes.

Modern Object Recognition Techniques

Recognizing objects on an application screen depends on the technology used to construct the application. In web applications, one can use the DOM (Document Object Model), HTML elements, JavaScript APIs, built-in application IDs or elements in the frameworks (AngularJS, UI5 from SAP, etc.) used to generate the web pages. In desktop applications built using .NET or Java, introspection techniques that rely on the accessibility features of the underlying technology can be used to identify and interact with objects.

Machine vision is also emerging as a viable technology to recognize objects on a screen. Sometimes a combination of techniques can be used as fail-safe mechanisms in case one technique does not work consistently.
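One way to picture the fail-safe idea is as an ordered chain of recognizers, each tried in turn until one succeeds. The sketch below assumes a screen is just a list of element dictionaries; `by_id`, `by_label` and `find_with_fallback` are hypothetical helpers, and a machine-vision recognizer would simply be another entry in the list.

```python
from typing import Callable, List, Optional, Tuple

Element = dict
Recognizer = Callable[[List[Element]], Optional[Element]]


def by_id(screen: List[Element]) -> Optional[Element]:
    """Primary technique: look the object up by a built-in ID."""
    return next((e for e in screen if e.get("id") == "postLeaveBtn"), None)


def by_label(screen: List[Element]) -> Optional[Element]:
    """Fallback technique: look the object up by its visible label."""
    return next((e for e in screen if e.get("label") == "Post Leave"), None)


def find_with_fallback(
    screen: List[Element], recognizers: List[Tuple[str, Recognizer]]
) -> Optional[Tuple[str, Element]]:
    """Try each recognizer in order and return the first hit with its name."""
    for name, recognize in recognizers:
        element = recognize(screen)
        if element is not None:
            return name, element
    return None


recognizers = [("id", by_id), ("label", by_label)]

# The ID changed in this build, so the ID lookup fails and the label lookup wins.
screen = [{"id": "btn_42", "label": "Post Leave", "tag": "button"}]
print(find_with_fallback(screen, recognizers)[0])  # label
```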

For more complex elements, composite objects can be created that combine more than one object and are recognized as a single logical object on which consistent actions can be performed.

Object definitions can be assigned to any object, whether simple or complex. A short list of examples of items that can be assigned a common object definition includes things like: Username Edit Box, Password Edit Box, Login button, Reset Password link, Sales Organization Edit Box, or the Table of Items on a Sales Order.

Having a database of object types and definitions allows new objects to be evaluated against those definitions using guided classification machine learning techniques. These techniques can automatically match new objects on the screen and classify them into the appropriate object type based on previous matches stored in the database.
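As a rough illustration of classifying a new object from previously stored matches, here is a tiny nearest-neighbour vote over feature sets. It is a stand-in chosen for brevity, not the classification technique the report refers to, and the feature names and the `known` examples are invented for the sketch.

```python
from collections import Counter

# Hypothetical database of previously matched objects: (features, object type).
known = [
    ({"tag:input", "type:text", "has_label"}, "edit box"),
    ({"tag:input", "type:password", "has_label"}, "edit box"),
    ({"tag:button", "clickable"}, "button"),
    ({"tag:a", "clickable", "href"}, "link"),
    ({"tag:input", "type:submit", "clickable"}, "button"),
]


def jaccard(a: set, b: set) -> float:
    """Similarity of two feature sets: shared features over all features."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(features: set, known: list, k: int = 3) -> str:
    """Vote among the k stored examples most similar to the new object."""
    ranked = sorted(known, key=lambda ex: jaccard(features, ex[0]), reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# A new, unseen email field is classified from its nearest stored examples.
print(classify({"tag:input", "type:email", "has_label"}, known))  # edit box
```

Every confirmed match can be appended to `known`, which is the sense in which the database improves as more objects are seen.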

Patterns in a specific application can also be used to train the model for the peculiarity of that application. Partial matching techniques can be used to locate objects even if a full match is not found.
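Partial matching can be sketched as scoring each candidate on the fraction of stored attributes it still satisfies and accepting the best candidate above a threshold. The attribute names and the 0.6 threshold below are assumptions made for the example, not values from the report.

```python
from typing import List, Optional


def match_score(definition: dict, candidate: dict) -> float:
    """Fraction of the stored attributes that the candidate still matches."""
    if not definition:
        return 0.0
    hits = sum(1 for key, value in definition.items() if candidate.get(key) == value)
    return hits / len(definition)


def best_partial_match(
    definition: dict, candidates: List[dict], threshold: float = 0.6
) -> Optional[dict]:
    """Return the highest-scoring candidate, or None if nothing clears the threshold."""
    scored = [(match_score(definition, c), c) for c in candidates]
    score, best = max(scored, key=lambda pair: pair[0], default=(0.0, None))
    return best if score >= threshold else None


stored = {"tag": "button", "id": "postLeaveBtn", "label": "Post Leave", "css_class": "btn-primary"}
on_screen = [
    {"tag": "button", "id": "cancelBtn", "label": "Cancel", "css_class": "btn-secondary"},
    {"tag": "button", "id": "submitLeave", "label": "Post Leave", "css_class": "btn-primary"},
]

# The ID changed, but three of four attributes still match (score 0.75).
print(best_partial_match(stored, on_screen)["id"])  # submitLeave
```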

Sophisticated object recognition is based on previous experience and real-world data collected in the field to make it more robust. This experience and collected data cannot be easily duplicated in a short time.

Extensibility: Making the Model Field Configurable for a Specific Application

Extensibility is a concept that allows object recognition to be tuned to a specific application. Applications have their own way of generating screens/pages and UI objects based on underlying frameworks that their developers use to generate the application user interface.

What this means is that the model can be explicitly trained to recognize certain patterns in the application user interface and then interpret those patterns as specific object types and actions that can be performed against them. This training can be done without writing code but by adding definition files that tell the model how to interpret the application screens or pages that it encounters.
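To make the idea of a definition file concrete, the sketch below uses a small JSON document whose rules map UI patterns to object types and actions. The file format, the rule fields and the `interpret` helper are hypothetical; the actual definition files used by the product are not described in this excerpt.

```python
import json

# Hypothetical definition file: rules that tell the model how to read this app's UI.
DEFINITIONS_JSON = """
{
  "rules": [
    {"match": {"tag": "div", "role": "combobox"},
     "object_type": "dropdown", "actions": ["select", "verify"]},
    {"match": {"tag": "span", "class": "app-btn"},
     "object_type": "button", "actions": ["click", "verify"]}
  ]
}
"""


def load_rules(text: str) -> list:
    """Parse the definition file and return its list of rules."""
    return json.loads(text)["rules"]


def interpret(element: dict, rules: list):
    """Return (object_type, actions) for the first rule the element satisfies."""
    for rule in rules:
        if all(element.get(key) == value for key, value in rule["match"].items()):
            return rule["object_type"], rule["actions"]
    return None


rules = load_rules(DEFINITIONS_JSON)

# This application renders its buttons as styled <span> elements.
print(interpret({"tag": "span", "class": "app-btn", "text": "Post Leave"}, rules))
# ('button', ['click', 'verify'])
```

Teaching the model about a new framework then means adding a rule to the file, with no change to the code that interprets it.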

This explicit training is very useful because it ‘sets the stage,’ so to speak, with a baseline on which the implicit training and pattern recognition can build, which greatly speeds up the process.

Using both explicit and implicit training ensures that object recognition is more consistent and therefore resilient to changes in the underlying application. For example, object recognition across multiple entirely different versions of an application can now be automated without requiring the automation builder to make any changes when they go from one version of the application to another.

Updating Application Models Dynamically

In the previous sections, we described how to model an application into its constituent windows, objects and actions. We further explained how objects can be consistently recognized and acted upon. Once this is done, it then becomes possible to update those definitions dynamically by recognizing changes in an application screen, matching those changes to the stored definitions and then updating the definitions incrementally and continuously as minor changes are observed.

This is done while the automation is executing. Instead of just carrying out the requested actions, the automation engine now ‘looks around’ every time it lands on a screen or a page to see if new objects have been added or if its previous definitions of existing objects need to be updated. Using pattern recognition and partial matching techniques, it can identify objects that are ‘similar’ to its stored definitions and update them to match the changes it observes. This ‘looking around’ can be done each time the automation executes, or it can be done by executing the automation in an ‘update mode’ in order to avoid possible performance hits from the process. The preferable approach is to do it every single time the automation executes.
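The ‘looking around’ pass might look roughly like the sketch below: every observed object is compared with the stored definitions, near matches refresh the stored definition, and objects that match nothing are reported as new. The `similarity` measure, the 0.5 threshold and the attribute names are assumptions for illustration only.

```python
def similarity(stored: dict, observed: dict) -> float:
    """Share of attributes (from either side) on which the two agree."""
    keys = set(stored) | set(observed)
    if not keys:
        return 0.0
    return sum(stored.get(k) == observed.get(k) for k in keys) / len(keys)


def look_around(model: dict, observed_objects: list, threshold: float = 0.5):
    """Update near-matching definitions in place; return (updated names, new objects)."""
    snapshot = {name: dict(defn) for name, defn in model.items()}
    updated, added = [], []
    for obs in observed_objects:
        best_name, best_score = None, 0.0
        for name, stored in snapshot.items():
            score = similarity(stored, obs)
            if score > best_score:
                best_name, best_score = name, score
        if best_name is not None and best_score >= threshold:
            if best_score < 1.0:  # similar but changed: refresh the definition
                model[best_name] = dict(obs)
                updated.append(best_name)
        else:
            added.append(obs)  # nothing similar is stored: a new object
    return updated, added


model = {
    "Leave Type": {"tag": "select", "id": "leaveType", "label": "Leave Type"},
    "Post Leave": {"tag": "button", "id": "postLeaveBtn", "label": "Post Leave"},
}
screen = [
    {"tag": "select", "id": "leaveType", "label": "Leave Type"},
    {"tag": "button", "id": "submitLeave", "label": "Post Leave"},
    {"tag": "input", "id": "comment", "label": "Comment"},
]
updated, added = look_around(model, screen)
print(updated, [o["id"] for o in added])  # ['Post Leave'] ['comment']
```

Running this pass on every execution keeps each change small, which is what makes a threshold-based match reliable; an ‘update mode’ would call the same function only on dedicated runs.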

When automatic matching is not possible, the execution engine can prompt the user to provide a ‘guided match’ by explicitly assigning an observed object to a previously defined one. Each guided match helps train the model, so recognition improves as more executions are carried out.
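A guided match can be sketched as a fallback callback: if automatic matching returns nothing, the engine asks the user, then stores the answer so the same object is recognized automatically next time. The `resolve_object`, `exact_match` and `ask_user_stub` names are hypothetical, and the stub stands in for a real prompt.

```python
from typing import Callable, Optional


def exact_match(observed: dict, model: dict) -> Optional[str]:
    """Stand-in for automatic matching: succeeds only on an identical definition."""
    return next((name for name, defn in model.items() if defn == observed), None)


def resolve_object(
    observed: dict,
    model: dict,
    auto_match: Callable[[dict, dict], Optional[str]],
    ask_user: Callable[[dict, list], Optional[str]],
) -> Optional[str]:
    """Match automatically if possible, otherwise fall back to a guided match."""
    name = auto_match(observed, model)
    if name is not None:
        return name
    name = ask_user(observed, sorted(model))
    if name is not None:
        model[name] = dict(observed)  # remember the guided match for next time
    return name


prompts = []  # records how often the user had to be asked


def ask_user_stub(observed: dict, choices: list) -> Optional[str]:
    """Pretend the user picks 'Post Leave' from the list of known objects."""
    prompts.append(observed)
    return "Post Leave" if "Post Leave" in choices else None


model = {"Post Leave": {"id": "postLeaveBtn", "label": "Post Leave"}}
redesigned = {"id": "btn_7f3", "label": "Submit"}

first = resolve_object(redesigned, model, exact_match, ask_user_stub)
second = resolve_object(redesigned, model, exact_match, ask_user_stub)
print(first, second, len(prompts))  # Post Leave Post Leave 1
```

The user is prompted once; the second execution matches automatically because the first guided match updated the stored definition.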

Download the entire chapter on Self-Healing Automation or view the full report.